Latency Benchmarks

libriscv is a unique emulator that focuses heavily on low-latency emulation. Most game scripts don't do any heavy computation, and when they do, it is often outsourced to the host. For example, LLM computations don't happen inside WASM sandboxes; they happen outside the sandbox in order to benefit from CPU- and hardware-specific features, GPU support and so on.

Function call latency

When the game engine wants to enter our script, a fixed cost has to be paid to enter and then leave the sandbox again. Sandboxes provide safety, and they require some setup to activate and some work afterwards to wind down. By call, we mean a function call into the VM: a VM function call. It's just like a regular function call, but it happens inside our safe sandbox.

[Figure: VM function call latency by number of arguments]

The graph shows the cost attached to having 0 to 8 integral function arguments.
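A fixed per-call cost like this can be estimated by timing many calls in a batch and dividing by the batch size. The following is a minimal sketch of such a harness, not the benchmark code used for the graph. The name `median_ns_per_call` and its parameters are assumptions made for illustration, and `fn` stands in for whatever performs the VM function call.

```cpp
#include <algorithm>
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical helper: runs `rounds` batches of `calls_per_round`
// invocations of `fn` and returns the median cost of one invocation
// in nanoseconds. Returns 0.0 if there is nothing to measure.
template <typename Fn>
double median_ns_per_call(Fn&& fn, std::size_t rounds, std::size_t calls_per_round)
{
    if (rounds == 0 || calls_per_round == 0)
        return 0.0;

    using clock = std::chrono::steady_clock;
    std::vector<double> samples;
    samples.reserve(rounds);

    for (std::size_t r = 0; r < rounds; r++) {
        const auto t0 = clock::now();
        for (std::size_t i = 0; i < calls_per_round; i++)
            fn(); // in a real benchmark: one call into the sandbox
        const auto t1 = clock::now();
        const double total_ns =
            std::chrono::duration<double, std::nano>(t1 - t0).count();
        samples.push_back(total_ns / static_cast<double>(calls_per_round));
    }

    // The median is less sensitive to scheduler noise than the mean.
    std::sort(samples.begin(), samples.end());
    return samples[samples.size() / 2];
}
```

Running the harness once per argument count, with `fn` passing that many integral arguments, would give one data point per bar. Batching matters because a single call is far shorter than the resolution and overhead of the clock itself.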

System call latency

Every time you need to ask the host game engine to do something, the system call latency must be paid. The lower it is, the more often it makes sense to call out to the host to perform some task. The task itself always runs at native performance.

[Figure: system call latency by number of arguments]

The graph shows the cost of calling out to the engine with 0 to 7 function arguments. It's beneficial to have a low threshold for performing system calls.
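The threshold idea can be written down as a simple break-even check: calling out pays off when the native cost of the task plus the system call latency is lower than the cost of doing the same work inside the sandbox. This is a sketch of that reasoning only. The function name `worth_calling_out` and its parameters are hypothetical, and any numbers fed into it are up to the reader to measure.

```cpp
// All values are in nanoseconds.
// Returns true when doing the work on the host (native cost plus the
// system call round trip) is cheaper than doing it inside the sandbox.
constexpr bool worth_calling_out(double emulated_ns,
                                 double native_ns,
                                 double syscall_ns)
{
    return native_ns + syscall_ns < emulated_ns;
}
```

The lower the `syscall_ns` term, the smaller the task that still passes the check, which is why a low system call latency widens the set of operations worth moving to the host.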

Real-world example

One real-world example is a script function that computes a rainbow vertex color. The game engine doesn't know what the function does; the point is that we are scripting a new entity in our game, one that should be a rainbow using vertex coloring. For that we needed to use std::sin() 3 times for the 2D signal.

[Figures: rainbow vertex color benchmark]

The substantially lower system call latency made it a no-brainer to make sin a system call, and as a result, the overall time needed is 5x to 7x lower than with the other emulators.
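To make the shape of such a function concrete, here is a hedged sketch of a rainbow color computed from three sine evaluations, with the sine itself pluggable. It is not the script used in the benchmark: `rainbow_color`, `to_channel`, `SinFn`, the phase offsets and the way `x`, `y` and `time` are combined are all assumptions for illustration. Inside a sandbox, the `sin_fn` argument could be a thin wrapper around a system call to the host, while natively it forwards to std::sin.

```cpp
#include <cmath>
#include <cstdint>

// Signature of a sine provider. In a sandboxed build this could wrap a
// system call to the host; here it defaults to the native std::sin.
using SinFn = float (*)(float);

inline float native_sin(float x) { return std::sin(x); }

// Maps a sine value in [-1, 1] to a color channel in [0, 255].
inline std::uint32_t to_channel(float s)
{
    return static_cast<std::uint32_t>((s * 0.5f + 0.5f) * 255.0f);
}

// Hypothetical rainbow: one sine per channel over a 2D signal,
// phase-shifted by 120 degrees between channels.
inline std::uint32_t rainbow_color(float x, float y, float time,
                                   SinFn sin_fn = native_sin)
{
    const float signal = x + y + time; // assumed way to combine the inputs
    const std::uint32_t r = to_channel(sin_fn(signal));
    const std::uint32_t g = to_channel(sin_fn(signal + 2.0943951f)); // +120 degrees
    const std::uint32_t b = to_channel(sin_fn(signal + 4.1887902f)); // +240 degrees
    // Packed with alpha in the top byte, then blue, green, red.
    return 0xFF000000u | (b << 16) | (g << 8) | r;
}
```

With this split, the three `sin_fn` calls are the only part whose cost depends on whether sine runs emulated or on the host, so the system call latency is paid exactly three times per color.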

Finally, note that we are comparing WebAssembly without game engine integration (just calling into the emulator directly) against libriscv fully integrated into a game engine, with extra sandboxing measures (like a maximum call depth). So we can safely assume that WebAssembly needs additional nanoseconds in a real game engine integration. It's better to sabotage ourselves a little than the opposite!

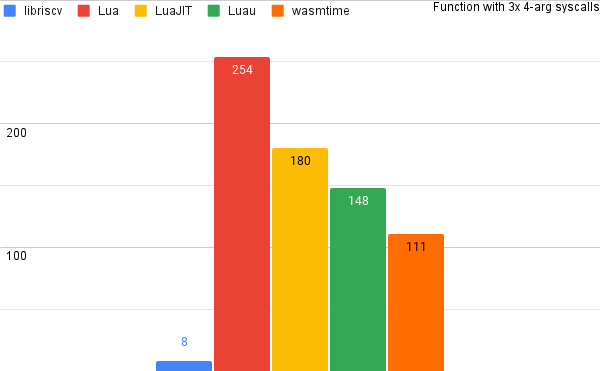

A made-up example

Nobody has the energy to fully integrate all of these solutions into a real game engine just for a benchmark, but we can take them as-is and measure their overheads. Imagine that we're calling an argument-less function that in turn makes 3 system calls with 4 arguments each:

In this made-up example we can't see the real measurements, but we can still see the tendencies of each solution. Interpreted libriscv is an order of magnitude faster than other solutions in this particular instance.
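The arithmetic behind the made-up example is a single sum: one argument-less call into the sandbox plus three system calls with 4 arguments each. A minimal sketch follows, where the `Overheads` struct and `made_up_cost_ns` are hypothetical names and the fields are meant to be filled with each solution's own measured overheads.

```cpp
// Measured overheads for one solution, in nanoseconds.
struct Overheads {
    double call_ns;    // entering and leaving the sandbox, no arguments
    double syscall_ns; // one system call with 4 arguments
};

// Total overhead of one argument-less call that makes `syscalls`
// system calls (3 in the made-up example).
constexpr double made_up_cost_ns(const Overheads& o, int syscalls = 3)
{
    return o.call_ns + static_cast<double>(syscalls) * o.syscall_ns;
}
```

Because the system call term is multiplied by three, differences in system call latency dominate the comparison as soon as the per-call costs are in the same range.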

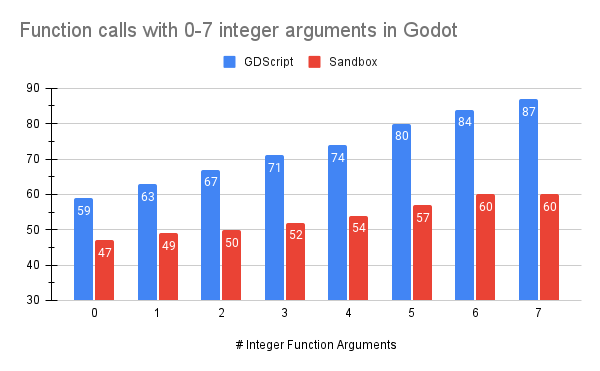

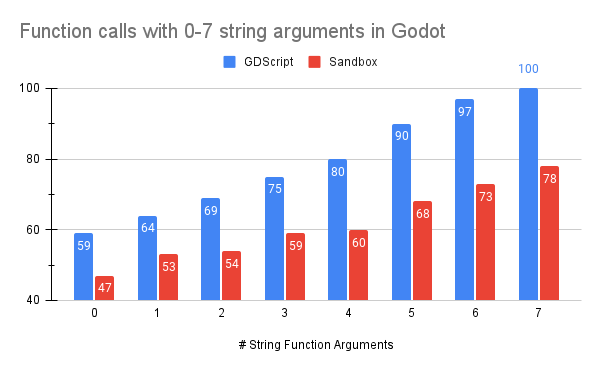

Godot engine integration

When integrated into the Godot engine, the fixed overheads outside of our control are 40ns for each call. This may improve if and when the Sandbox is embedded directly into Godot. For now, this is the reality:

As a sandbox we have to translate and verify everything, which is costly when Variants have so many rules. Matching the latency of GDScript, which is not a sandbox, is great!

Since libriscv is much faster than GDScript at processing, we can say that it always makes sense to call into the sandbox, compared to staying in GDScript. At least when performance is a factor.